文章导航

研一上系统优化中期作业,优化求解。

Project Requirement

Give a non-quadratic nonlinear objective function and linear inequality constraints (at least two).

Select a computational method (primal or dual) for constrained optimization you learn in the class.

Show your computational results and corresponding analysis.

Submit the typed report.

Attach your program

Problem

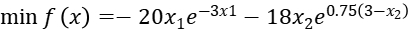

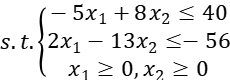

Consider the problem below:

We solve this problem by using Lagrangian Relaxation Method.

Main method

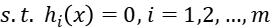

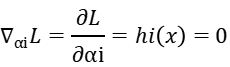

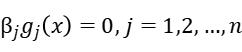

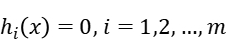

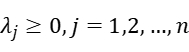

When we use the Lagrangian Relaxation Method, we describe the problem as below, the i-th equality constrain is expressed as  while the i-th inequality constrain is expressed as

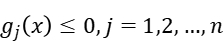

while the i-th inequality constrain is expressed as

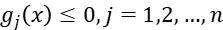

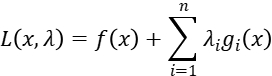

So the unconstrained optimization problem can be described as:

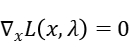

We can construct the Joseph-Louis Lagrange operator function, then the necessary conditions for the optimal solution can be obtained.

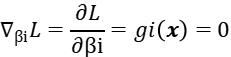

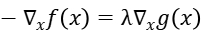

It can be found that when the constrained plane is tangent to the equipotential surface, the gradient orthogonality on the equipotential line and the gradient orthogonality on the constrained plane are both on the same tangent plane, so the two gradients are expressed linearly. In other words, we can express each other with a coefficient, which we usually call the Lagrangian multiplier.

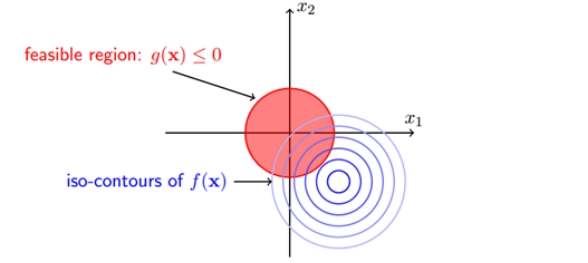

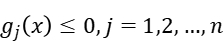

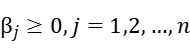

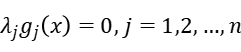

When the constrained area contains the original feasible solution of the objective function, and the feasible solution of the constrained is still inside the constrained area at this time, corresponding to the case of g(x)<0, then the constrained condition does not work; when the constrained area is not When the original feasible solution of the objective function is included, the feasible solution falls on the boundary g(x)=0 after adding constraints. The following figure describes the two cases respectively. The right figure shows that the feasible solution with the constraint will fall on the boundary of the constraint region.

So we know that in either case the expression below always holds:

If λ≠0, this means that the feasible solution x falls on the boundary of the constrained region. At this time, the feasible solution should be as close as possible to the unconstrained solution, so on the constrained boundary, the negative gradient direction of the objective function should be far away from the constrained region Towards the unconstrained solution, the gradient direction of the constraint function is exactly the same as the negative gradient direction of the objective function:

This solution also holds in equation constrains, thus we get the KKT conditions:

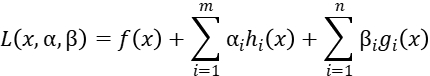

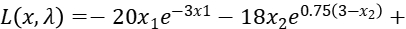

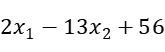

Our problem has non-quadratic nonlinear objective function and linear inequality constraints. So we can rewrite the unconstrained optimization problem as:

Where  equal to the

equal to the  above. The KKT conditions can be rewrite as:

above. The KKT conditions can be rewrite as:

Results and analysis

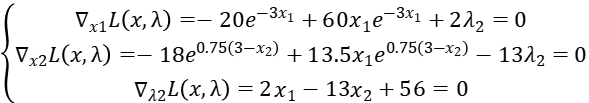

Step1: Write the Lagrange function:

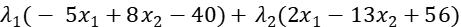

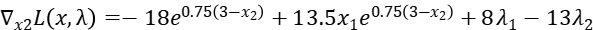

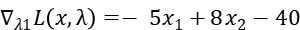

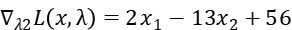

Step2: Get the partial derivative of  :

:

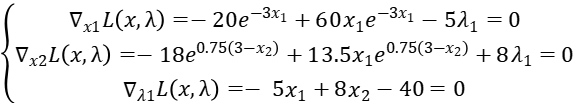

Step3: Relax constrain2, that means  .

.

We get the equation:

Solve this equation, we get: x1 = 0.157380, x2 = 5.098362,

= -1.316836,  = -20.983589,

= -20.983589,

Constrain2:  = -9.963951 < 0

= -9.963951 < 0

The results meet constraint2 and x1,x2 > 0, but  < 0 doesn’t meet KKT condition (4), so they are *not* optimal solutions.

< 0 doesn’t meet KKT condition (4), so they are *not* optimal solutions.

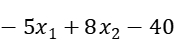

Step4: Relax constrain1, that means  .

.

We get the equation:

Solve this equation, we get: x1 = 0.252336, x2 = 4.346513,

= 1.139798,  = -30.866020,

= -30.866020,

Constrain1:  = -6.489574 < 0

= -6.489574 < 0

The results meet constraint1, x1,x2 > 0 and  > 0 meets KKT condition (4), so they are optimal solutions.

> 0 meets KKT condition (4), so they are optimal solutions.

Step5: Conclusion

We obtain that when the point is (0.252336, 4.346513), the objective function reaches the minimum value: -30.866020.

Test1

We use function fmincon in Matlab and function minimize in Python to solve the nonlinear programming. The results are written below:

In Matlab, we get the optimal solution is (0.2523, 4.3465), the minimum value is -30.8660.

In Python, we get the optimal solution is (0.252332, 4.346513), the minimum value is -30.866020.

So the results we get is correct!

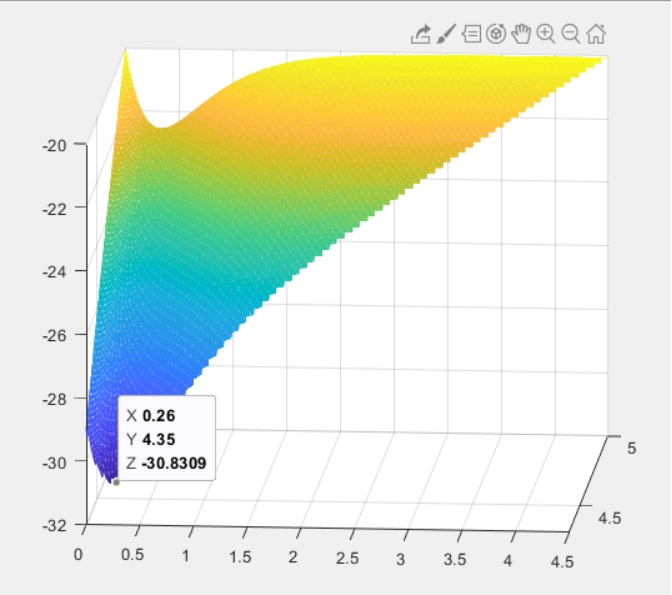

Test2

We draw the corresponding 3D picture of the objective function, points witch don’t satisfy the constrains were deleted. The minimum point is very close to (0.26, 4.35), and the minimum value is close to -30.8309.

Program

Matlab program

1 | x = 0:.01:5;y = 0:.01:5; |

Python program

1 | from scipy.optimize import minimize |